System Haptics: 7 Revolutionary Ways It’s Transforming Tech

Imagine feeling the tap of a notification, the rumble of a virtual explosion, or the texture of fabric—all through your smartphone. Welcome to the world of system haptics, where touch meets technology in the most immersive way possible.


What Are System Haptics? A Deep Dive into Touch-Based Feedback

System haptics refers to the technology that simulates the sense of touch by using vibrations, motions, or forces in electronic devices. Unlike simple vibration motors from the past, modern system haptics deliver precise, context-aware tactile feedback that enhances user interaction across smartphones, wearables, gaming consoles, and even medical devices.

The Evolution from Basic Vibration to Advanced Feedback

Early mobile phones used basic eccentric rotating mass (ERM) motors to produce a single type of vibration, often loud and coarse. These were effective for alerts but lacked nuance. The real shift began with the introduction of linear resonant actuators (LRAs), which enabled faster, more controlled vibrations. Apple’s Taptic Engine, which debuted in 2015 in the Apple Watch and reached the iPhone later that year with the iPhone 6s, marked a turning point in system haptics by offering finely tuned, crisp feedback.

- ERM motors: Slow response, limited control

- LRAs: Faster actuation, energy-efficient

- Piezo actuators: Ultra-precise, used in high-end devices

This evolution allowed system haptics to move beyond mere alerts and into simulating textures, button clicks, and even spatial cues.

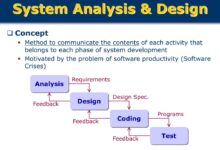

How System Haptics Work: The Science Behind the Sensation

At the core of system haptics is a feedback loop between software, firmware, and hardware. When a user interacts with a touchscreen or controller, the operating system sends a command to the haptic driver, which activates an actuator to produce a specific vibration pattern. These patterns are designed to mimic real-world sensations.

For example, when you press a virtual button on an iPhone, the Taptic Engine produces a short, crisp tap that feels like a physical click, even though the button itself never moves. This illusion is achieved through precise timing, amplitude control, and waveform shaping.
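To make timing, amplitude control, and waveform shaping concrete, here is a minimal Python sketch of a "click": a short sine burst with an exponentially decaying envelope. The function name and every default value (170 Hz drive frequency, 12 ms duration, 3 ms decay, 8 kHz sample rate) are assumptions chosen for illustration, not figures from any real actuator or vendor.

```python
import math

def click_waveform(freq_hz=170.0, duration_ms=12.0, decay_ms=3.0,
                   peak=1.0, sample_rate=8000):
    """Return the samples of a short, decaying sine burst (a 'click').

    All defaults are illustrative assumptions, not measured values.
    """
    n = int(sample_rate * duration_ms / 1000.0)   # timing: how long the burst lasts
    samples = []
    for i in range(n):
        t = i / sample_rate                        # time in seconds
        # Amplitude control: the envelope fades out quickly so the tap feels crisp.
        envelope = peak * math.exp(-t / (decay_ms / 1000.0))
        # Waveform shaping: a sine at the assumed drive frequency.
        samples.append(envelope * math.sin(2 * math.pi * freq_hz * t))
    return samples

tap = click_waveform()
print(len(tap), "samples in the click")
```

A lower frequency with a slower decay, for example `click_waveform(freq_hz=80, duration_ms=40, decay_ms=15)`, would give a softer, longer burst under the same assumptions.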

“Haptics is the silent language of interaction—when done right, users don’t notice it, but they feel it.” — Dr. Karon MacLean, Haptics Researcher, University of British Columbia

The Role of System Haptics in Smartphones and Mobile Devices

Smartphones are the most widespread platform for system haptics, where they enhance everything from typing to navigation. Leading manufacturers like Apple, Samsung, and Google have invested heavily in refining tactile feedback to improve usability and emotional engagement.

Apple’s Taptic Engine: Setting the Gold Standard

Apple’s implementation of system haptics through the Taptic Engine is widely regarded as the industry benchmark. Found in iPhones, Apple Watches, and MacBooks with Force Touch trackpads, the Taptic Engine uses LRAs to deliver over 20 distinct haptic responses. These include:

- Haptic Touch: Long-press feedback replacing 3D Touch

- Keyboard Taps: Simulated keypresses during typing

- Alerts and Notifications: Customizable pulses for calls, messages, and alarms

The integration with iOS allows developers to access haptic APIs, enabling apps to trigger context-specific feedback. For instance, a meditation app might use a soft pulse to guide breathing, while a game uses sharp jolts for in-game events.

Android’s Approach to System Haptics: Customization and Control

Android offers a more fragmented but flexible approach to system haptics. While Google’s Pixel series features finely tuned haptic feedback using custom LRAs, OEMs like Samsung, OnePlus, and Xiaomi implement their own variations. Samsung’s Galaxy devices use what they call “vibration motors with multi-layer haptic waveforms” to simulate different textures.

Android 8.0 (API level 26) introduced the VibrationEffect API, allowing developers to define complex vibration patterns with precise timing and amplitude, and Android 10 added predefined effects such as clicks and ticks. This opened the door for richer haptic experiences in apps ranging from messaging to gaming.
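One way to picture a pattern with "precise timing and amplitude" is as two parallel lists: how long each segment lasts and how strong it is. The Python sketch below is only a model of that idea, loosely inspired by the timing and amplitude arrays that VibrationEffect waveforms take. The `HapticPattern` class, its methods, and the `double_tap` values are hypothetical and are not part of the Android API.

```python
from dataclasses import dataclass
from typing import List

MAX_AMPLITUDE = 255  # assumed 8-bit amplitude scale for this sketch

@dataclass
class HapticPattern:
    """Hypothetical model of a vibration pattern, not a real platform class."""
    timings_ms: List[int]   # duration of each segment in milliseconds
    amplitudes: List[int]   # strength of each segment, 0 (off) to MAX_AMPLITUDE

    def __post_init__(self):
        if len(self.timings_ms) != len(self.amplitudes):
            raise ValueError("timings and amplitudes must have the same length")
        if any(t < 0 for t in self.timings_ms):
            raise ValueError("timings must be non-negative")
        if any(not 0 <= a <= MAX_AMPLITUDE for a in self.amplitudes):
            raise ValueError("amplitudes must be within 0..MAX_AMPLITUDE")

    def total_duration_ms(self) -> int:
        return sum(self.timings_ms)

    def scaled(self, factor: float) -> "HapticPattern":
        """Return a copy with every amplitude scaled and clamped to the valid range."""
        amps = [min(MAX_AMPLITUDE, max(0, round(a * factor))) for a in self.amplitudes]
        return HapticPattern(list(self.timings_ms), amps)

# A strong tap, a pause, then a weaker tap (values are illustrative).
double_tap = HapticPattern(timings_ms=[30, 60, 30], amplitudes=[255, 0, 128])
print(double_tap.total_duration_ms(), "ms in total")
```

Validating the lists up front keeps a malformed pattern from reaching the hardware layer, which is the main reason for wrapping the raw arrays in a small type.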

One notable example is the Samsung Galaxy S21 Ultra, which uses system haptics to simulate the feel of a camera shutter when taking photos—even though the lens doesn’t physically move. This subtle feedback enhances the user’s sense of control and realism.

Gaming and Virtual Reality: Immersion Through System Haptics

In gaming and VR, system haptics are no longer a luxury—they’re essential for immersion. From console controllers to VR gloves, tactile feedback bridges the gap between digital worlds and physical sensation.

DualSense Controller: Redefining Game Feel

Sony’s PlayStation 5 DualSense controller is a landmark in system haptics. It replaces traditional rumble motors with voice-coil actuators for advanced haptic feedback and adds adaptive triggers. The adaptive triggers can simulate resistance, like drawing a bowstring or pressing a brake pedal, while the haptic engine delivers nuanced vibrations that reflect in-game environments.

For example, in Returnal, players feel the crunch of gravel, the splash of rain, and the recoil of weapons through finely tuned haptic patterns. This level of detail transforms gameplay from visual and auditory to fully sensory.

- Adaptive Triggers: Variable resistance based on in-game actions

- HD Haptics: High-definition vibrations with spatial precision

- Contextual Feedback: Matches environmental and emotional cues

According to Sony, the DualSense uses over 100 different haptic profiles, each tailored to specific game scenarios.

Haptics in VR: From Gloves to Full-Body Suits

Virtual reality takes system haptics to the next level with wearable devices that simulate touch across the body. Companies like HaptX, bHaptics, and Teslasuit are developing gloves and suits that use microfluidic actuators, pneumatic bladders, and electrical stimulation to recreate textures, pressure, and temperature.

HaptX Gloves, for instance, combine force feedback, tactile sensation, and motion tracking to let users “feel” virtual objects. In training simulations for surgeons or factory workers, this realism is critical for muscle memory and safety.

Meta (formerly Facebook) has also shown research prototypes of haptic wristbands that use vibration and squeeze pressure at the wrist to suggest contact with virtual objects. This technology, still in development, could let users sense virtual buttons or textures without holding a controller.

Wearables and Health: System Haptics in Smartwatches and Medical Devices

Wearables are among the most intimate platforms for system haptics, delivering silent, discreet feedback directly to the skin. From fitness tracking to medical alerts, haptics play a crucial role in user experience and safety.

Apple Watch: Silent Alerts and Haptic Navigation

The Apple Watch uses system haptics extensively for notifications, fitness coaching, and navigation. Its Taptic Engine delivers a range of taps, pulses, and even Morse code-like patterns to communicate different messages without sound.

For example, during a workout, the watch might tap your wrist to signal the start of a new interval. When navigating, it uses directional taps—left, right, or circular—to guide you without looking at the screen. This is especially useful for cyclists or runners.

Apple’s Digital Touch feature, available since the first Apple Watch, also lets users send custom tap patterns to another Apple Watch wearer as a form of tactile communication.

Haptics in Medical and Assistive Technologies

Beyond consumer devices, system haptics are revolutionizing healthcare. Prosthetic limbs now use haptic feedback to restore the sense of touch for amputees. Sensors in the prosthetic hand send signals to actuators on the user’s skin, allowing them to “feel” objects they grasp.
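The sensor-to-actuator path described above can be sketched as a simple mapping from a force reading to a drive level. This Python example is a rough illustration only: the function name, the 0.2 N dead zone, and the 10 N saturation point are assumptions, and real prosthetic systems tune such curves per user and per sensor.

```python
def force_to_amplitude(force_n, dead_zone_n=0.2, max_force_n=10.0):
    """Map a fingertip force reading (newtons) to a 0.0-1.0 actuator drive level.

    Thresholds are illustrative assumptions, not clinical values.
    """
    if force_n <= dead_zone_n:
        return 0.0   # ignore sensor noise and very light contact
    if force_n >= max_force_n:
        return 1.0   # saturate so a hard squeeze cannot overdrive the actuator
    # Linear ramp between the dead zone and the saturation point.
    return (force_n - dead_zone_n) / (max_force_n - dead_zone_n)

for reading in (0.1, 2.0, 5.1, 12.0):
    print(reading, "N ->", round(force_to_amplitude(reading), 2))
```

A linear ramp is the simplest choice here. A logarithmic curve may match how skin perceives intensity more closely, but that is a tuning decision beyond this sketch.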

In rehabilitation, haptic exoskeletons help stroke patients regain motor control by providing resistance and guidance during exercises. Similarly, haptic belts and vests are being used to assist visually impaired individuals by converting visual data into tactile cues.

A study published in Science Robotics demonstrated that haptic feedback in robotic surgery systems reduced errors by up to 30%, as surgeons could “feel” tissue resistance through the robotic arms.

Automotive Applications: Safer Driving Through Haptic Feedback

Modern vehicles are integrating system haptics into steering wheels, seats, and pedals to improve safety and driver awareness. With the rise of autonomous driving, haptics serve as a critical communication channel between car and driver.

Steering Wheel Alerts and Lane-Keeping Feedback

Many luxury vehicles, including those from BMW, Mercedes-Benz, and Tesla, use haptic steering wheels to alert drivers of lane departures, blind-spot intrusions, or impending collisions. Instead of relying solely on visual or auditory warnings, the wheel vibrates in specific patterns to convey urgency and direction.

For example, a left-side vibration indicates a vehicle in the left blind spot, while a pulsing pattern warns of forward collision. This reduces cognitive load and allows drivers to react faster.

Haptic Pedals and Autonomous Handover Signals

As semi-autonomous systems become more common, haptics help manage the transition between human and machine control. When the car requests the driver to take over, haptic pedals can pulse or resist to signal the need for immediate action.

Research from the University of Michigan Transportation Research Institute found that haptic alerts reduced takeover time by 25% compared to visual or auditory cues alone. This could be the difference between avoiding a crash and being involved in one.

Future Trends in System Haptics: What’s Next?

The future of system haptics is not just about better vibrations—it’s about creating a full sensory experience. Emerging technologies are pushing the boundaries of what’s possible, from ultrasonic haptics to brain-computer interfaces.

Ultrasonic and Mid-Air Haptics

Ultrasonic haptics use focused sound waves to create tactile sensations in mid-air. Companies like Ultrahaptics (now Ultraleap, following its 2019 merger with Leap Motion) have developed systems that allow users to feel virtual buttons, sliders, or textures without touching a screen.

This technology is being explored for use in automotive dashboards, where drivers can control infotainment systems without taking their eyes off the road. It’s also being tested in public kiosks to reduce germ transmission.

AI-Driven Personalized Haptics

Artificial intelligence is beginning to personalize haptic feedback based on user behavior, preferences, and even emotional state. Machine learning models can analyze how a user responds to different haptic patterns and adjust them in real time.

For example, a smartwatch might learn that a user prefers stronger vibrations in noisy environments or softer taps during sleep tracking. AI could also generate haptic “signatures” for different contacts—so you’d know who’s calling just by how your phone taps your palm.
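As a very small stand-in for the learning described here, the sketch below keeps an exponential moving average of the intensity a user picks in each context. It is not a machine learning model in any serious sense, and the class name, context labels, and learning rate are all hypothetical. It only shows how repeated adjustments could shift a default over time.

```python
class IntensityPreference:
    """Hypothetical per-context intensity tracker using a moving average."""

    def __init__(self, default=0.5, learning_rate=0.3):
        self.default = default              # intensity used before any feedback
        self.learning_rate = learning_rate  # how quickly new choices override old ones
        self._by_context = {}

    def record_adjustment(self, context, chosen_intensity):
        """Nudge the stored intensity for a context toward the user's choice."""
        current = self._by_context.get(context, self.default)
        updated = current + self.learning_rate * (chosen_intensity - current)
        self._by_context[context] = min(1.0, max(0.0, updated))

    def intensity_for(self, context):
        return self._by_context.get(context, self.default)

prefs = IntensityPreference()
for _ in range(10):
    prefs.record_adjustment("noisy", 1.0)   # user keeps turning vibration up when it is loud
print(round(prefs.intensity_for("noisy"), 2), prefs.intensity_for("quiet"))
```

Contexts the user has never adjusted simply fall back to the default, so the device behaves predictably until it has something to learn from.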

Integration with AR and the Metaverse

As augmented reality (AR) and the metaverse evolve, system haptics will be essential for grounding digital experiences in physical reality. Imagine feeling the texture of a virtual fabric in an online store or the recoil of a weapon in a multiplayer AR game.

Companies like Meta and Microsoft are investing in haptic wearables that sync with AR glasses. Microsoft’s HoloLens 2 already supports hand tracking, and future versions may include haptic feedback to simulate object interaction.

Challenges and Limitations of Current System Haptics

Despite rapid advancements, system haptics still face technical and practical challenges. Power consumption, hardware size, and user perception variability are among the biggest hurdles.

Power Efficiency and Battery Life

Haptic actuators, especially high-fidelity ones, can consume significant power. In wearables and mobile devices, this can impact battery life. Engineers are working on low-power haptic drivers and predictive haptics that only activate when necessary.

For example, some smartphones now use AI to predict when haptic feedback is needed and disable it during idle periods.

Standardization and Fragmentation

Unlike audio or video, there’s no universal standard for haptic feedback. Each manufacturer uses proprietary hardware and software, making it difficult for developers to create consistent experiences across platforms.

The World Wide Web Consortium (W3C) has taken a first step with its Vibration API, which lets websites trigger simple vibration patterns on compatible devices, much like they play audio or video. It offers no control over amplitude or waveform, however, so richer cross-platform haptic content still lacks a common standard.

User Sensitivity and Accessibility

Not all users perceive haptics the same way. Age, skin sensitivity, and medical conditions can affect how vibrations are felt. Some users may find haptics distracting or even uncomfortable.

Future systems will need adaptive calibration—allowing users to fine-tune intensity, duration, and pattern based on personal preference. Accessibility features, such as haptic subtitles for the hearing impaired, are also being explored.
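A minimal sketch of such calibration, assuming a pattern is a list of `(duration_ms, amplitude)` pairs with amplitude between 0.0 and 1.0: a user profile scales both values and can switch haptics off entirely. The `HapticProfile` fields and the `apply_profile` function are hypothetical names for illustration.

```python
from dataclasses import dataclass

@dataclass
class HapticProfile:
    """Hypothetical per-user settings; field names are assumptions."""
    enabled: bool = True
    intensity_scale: float = 1.0   # above 1.0 strengthens, below 1.0 softens
    duration_scale: float = 1.0    # above 1.0 lengthens each segment

def apply_profile(segments, profile):
    """Return the segments adjusted for one user, or an empty list if disabled."""
    if not profile.enabled:
        return []
    adjusted = []
    for duration_ms, amplitude in segments:
        # Clamp so a large scale factor cannot exceed the actuator's full drive.
        new_amp = min(1.0, max(0.0, amplitude * profile.intensity_scale))
        # Keep every segment at least 1 ms long after scaling.
        new_dur = max(1, round(duration_ms * profile.duration_scale))
        adjusted.append((new_dur, new_amp))
    return adjusted

gentle = HapticProfile(intensity_scale=0.5, duration_scale=1.0)
print(apply_profile([(20, 0.5), (40, 1.0)], gentle))
```

Applying the profile at playback time, rather than baking it into each pattern, means an app can define its patterns once and still respect every user's settings.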

How Developers Can Leverage System Haptics

For app and game developers, system haptics offer a powerful tool to enhance user engagement. With the right tools and design principles, haptics can make digital interactions more intuitive and satisfying.

Platform-Specific Haptic APIs

Both iOS and Android provide robust APIs for integrating system haptics:

- iOS: UIFeedbackGenerator classes (Impact, Notification, Selection)

- Android: VibrationEffect and HapticFeedbackConstants

- Unity: Input System package with haptic support for game controllers

These APIs allow developers to trigger predefined haptic patterns or create custom waveforms.

Best Practices for Haptic Design

Effective haptic design follows several key principles:

- Context Relevance: Haptics should match the user action (e.g., a soft tap for a menu selection, a strong jolt for an error).

- Minimalism: Avoid overuse—too many vibrations can be annoying or desensitizing.

- Consistency: Use the same haptic pattern for the same action across the app.

- Accessibility: Allow users to disable or adjust haptics in settings.

Apple’s Human Interface Guidelines recommend using haptics to “reinforce user actions, not replace visual feedback.”
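These principles can be enforced in code rather than left to convention. The Python sketch below is one possible approach, with hypothetical names throughout: a single table maps each action to one pattern (consistency), a minimum interval suppresses rapid repeats (minimalism), and a flag honors the user's setting (accessibility). The pattern names and the 0.1 second interval are assumptions.

```python
class HapticPolicy:
    """Hypothetical gatekeeper that decides whether a haptic should play."""

    def __init__(self, patterns, min_interval_s=0.1, enabled=True):
        self.patterns = dict(patterns)        # one pattern per action, app-wide
        self.min_interval_s = min_interval_s  # shortest allowed gap between haptics
        self.enabled = enabled                # mirrors the user's on/off setting
        self._last_fired = None

    def request(self, action, now_s):
        """Return the pattern name to play, or None if it should be suppressed."""
        if not self.enabled or action not in self.patterns:
            return None
        if self._last_fired is not None and now_s - self._last_fired < self.min_interval_s:
            return None   # too soon after the previous haptic
        self._last_fired = now_s
        return self.patterns[action]

policy = HapticPolicy({"select": "soft_tap", "error": "strong_jolt"})
print(policy.request("select", 0.0))    # first request is allowed
print(policy.request("select", 0.05))   # arrives within the minimum interval
```

Passing the timestamp in as an argument, instead of reading the clock inside the class, keeps the policy easy to test.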

Case Study: Haptics in Mobile Gaming

Mobile games like Genshin Impact and PUBG Mobile use system haptics to enhance immersion. When a character casts a spell or fires a weapon, the phone delivers a corresponding vibration. Some games even sync haptics with music beats in rhythm-based levels.
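Syncing haptics to music comes down to arithmetic on the tempo: at a given beats-per-minute value, one beat lasts 60000 / BPM milliseconds. The sketch below computes when each pulse should fire. The function name and the `offset_ms` parameter are assumptions for illustration, and it presumes a constant tempo, which real tracks do not always have.

```python
def beat_times_ms(bpm, duration_s, offset_ms=0):
    """Return the millisecond timestamps of each beat within a clip.

    Assumes a constant tempo. offset_ms is the time of the first beat.
    """
    if bpm <= 0:
        raise ValueError("bpm must be positive")
    interval_ms = 60000.0 / bpm   # length of one beat in milliseconds
    times = []
    beat = 0
    while offset_ms + beat * interval_ms < duration_s * 1000:
        times.append(round(offset_ms + beat * interval_ms))
        beat += 1
    return times

print(beat_times_ms(120, 2))
```

Each timestamp would then be handed to whatever scheduler the game engine provides, which is outside the scope of this sketch.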

Developers report that games with well-implemented haptics have higher user retention and engagement. According to a Unity Technologies survey, 68% of players prefer games with haptic feedback, and 42% are more likely to make in-app purchases.

Conclusion: The Silent Revolution of System Haptics

System haptics may operate in the background, but their impact is anything but subtle. From making smartphones feel more responsive to enabling life-changing medical devices, haptic technology is reshaping how we interact with the digital world. As AI, VR, and AR continue to evolve, system haptics will become even more integral, transforming touch from a simple sensation into a sophisticated language of interaction.

What are system haptics?

System haptics are technologies that simulate the sense of touch through vibrations, motions, or forces in electronic devices, enhancing user interaction with tactile feedback.

How do system haptics work in smartphones?

They use actuators like linear resonant actuators (LRAs) or piezo motors to produce precise vibrations. These are controlled by software to simulate clicks, textures, or alerts based on user actions.

Which devices use advanced system haptics?

Apple’s iPhone and Apple Watch (Taptic Engine), Sony’s PS5 DualSense controller, Samsung Galaxy devices, and VR systems like HaptX Gloves are leading examples.

Can haptics improve accessibility?

Yes. Haptics help visually impaired users navigate interfaces, assist amputees in feeling through prosthetics, and provide silent alerts for hearing-impaired individuals.

Are there standards for system haptics?

Not yet in any widespread form. The W3C Vibration API covers simple vibration patterns on the web, but most haptic systems are still proprietary to manufacturers.

The silent revolution of system haptics is just beginning. As technology becomes more embedded in our lives, the ability to feel our devices will become as important as seeing or hearing them. Whether it’s a gentle tap on the wrist or the rumble of a virtual engine, system haptics are turning the digital world into a truly tactile one.
