System Monitor: 7 Ultimate Tools for Peak Performance

Ever wondered why your server crashes at the worst time? A solid system monitor could be your digital guardian, silently watching every heartbeat of your IT infrastructure.

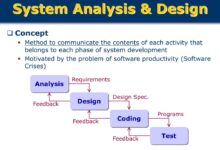

What Is a System Monitor and Why It Matters

A system monitor is a software tool or hardware device designed to track, analyze, and report the performance and health of computer systems. Whether it’s a single desktop or a sprawling cloud-based network, system monitoring ensures everything runs smoothly, efficiently, and securely. Without it, IT teams are essentially flying blind—reacting to outages instead of preventing them.

Core Functions of a System Monitor

At its core, a system monitor performs several critical functions that keep IT environments stable and responsive. These include real-time tracking of CPU usage, memory consumption, disk I/O, network activity, and process behavior. By continuously gathering this data, a system monitor helps identify bottlenecks before they become critical issues.

- Real-time performance tracking

- Alerting on abnormal behavior

- Historical data logging for trend analysis

For example, tools like Nagios have long been industry standards for monitoring server health across thousands of enterprises. These tools don’t just collect data—they make it actionable.

Types of System Monitoring

Not all monitoring is created equal. There are several distinct types of system monitoring, each serving a unique purpose:

- Hardware Monitoring: Tracks physical components like temperature, fan speed, and power supply status.

- Software Monitoring: Observes application performance, service uptime, and process execution.

- Network Monitoring: Analyzes bandwidth usage, latency, packet loss, and connectivity.

- Log Monitoring: Parses system and application logs to detect errors, security threats, or configuration changes.

“Monitoring is not about collecting data—it’s about understanding what that data means for your business.” — DevOps Engineer, Google Cloud

Top 7 System Monitor Tools in 2024

The market is flooded with system monitor solutions, but only a few stand out in terms of reliability, scalability, and ease of use. Here’s a breakdown of the top seven tools that dominate the landscape this year.

1. Nagios XI

Nagios XI is the commercial edition of Nagios, built on the open-source Nagios Core engine, and it remains one of the most powerful system monitor platforms available. It offers deep visibility into servers, networks, and applications through customizable dashboards and robust alerting mechanisms. Its plugin architecture allows integration with virtually any system component.

- Supports hybrid and multi-cloud environments

- Extensive plugin library (over 5,000)

- Advanced alert escalation and notification options

Learn more at Nagios XI Official Site.

2. Zabbix

Zabbix is an open-source powerhouse known for its scalability and real-time monitoring capabilities. It’s particularly popular among large enterprises due to its ability to handle tens of thousands of metrics simultaneously.

- Auto-discovery of network devices

- Built-in visualization tools (graphs, maps, screens)

- Support for SNMP, IPMI, JMX, and custom scripts

Zabbix excels in environments where automation and self-service monitoring are key. Visit Zabbix.com for documentation and downloads.

3. Datadog

Datadog is a cloud-based system monitor that has gained massive traction among DevOps teams. It combines infrastructure monitoring with application performance management (APM), log management, and security monitoring in a single platform.

- Real-time dashboards with AI-powered anomaly detection

- Seamless integration with AWS, Azure, GCP, Kubernetes

- User-friendly interface ideal for non-technical stakeholders

Datadog’s strength lies in its ecosystem. With over 600 integrations, it can monitor everything from PostgreSQL databases to message queues such as Kafka and RabbitMQ, and it can route alerts to chat tools like Slack. Explore it at Datadoghq.com.

4. Prometheus + Grafana

This dynamic duo is a favorite among developers and SREs (Site Reliability Engineers). Prometheus collects and stores time-series data, while Grafana provides stunning visualizations and dashboards.

- Prometheus scrapes metrics via HTTP at regular intervals

- Grafana supports templated dashboards and panel plugins

- Ideal for containerized environments using Docker and Kubernetes

The combination is especially effective for microservices architectures. Get started at Prometheus.io and Grafana.com.
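
To make the scrape model concrete, here is a minimal sketch of an HTTP endpoint that serves one gauge in the Prometheus text exposition format, using only the Python standard library. The metric name `demo_queue_depth`, its value, and the port are hypothetical; a real service would more likely use an official Prometheus client library.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer


def render_metrics(metrics):
    """Render {name: (help_text, value)} as Prometheus text exposition lines."""
    lines = []
    for name, (help_text, value) in sorted(metrics.items()):
        lines.append(f"# HELP {name} {help_text}")
        lines.append(f"# TYPE {name} gauge")
        lines.append(f"{name} {value}")
    return "\n".join(lines) + "\n"


def collect():
    # Hypothetical metric and static value, for illustration only.
    return {"demo_queue_depth": ("Number of jobs waiting in the demo queue.", 3)}


class MetricsHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Prometheus scrapes /metrics by default.
        if self.path != "/metrics":
            self.send_error(404)
            return
        body = render_metrics(collect()).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; version=0.0.4; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port=8000):
    """Block forever, answering scrapes on the given (arbitrary) port."""
    HTTPServer(("0.0.0.0", port), MetricsHandler).serve_forever()
```

Calling `serve()` and pointing a Prometheus scrape job at `host:8000` would be enough to see the gauge appear; Grafana then reads it from Prometheus like any other series.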

5. SolarWinds Server & Application Monitor (SAM)

SolarWinds SAM is a comprehensive system monitor tailored for enterprise IT teams. It provides deep application performance insights alongside traditional server monitoring.

- Pre-built templates for common applications (SQL Server, Exchange, etc.)

- Automated root cause analysis

- Capacity planning and forecasting tools

While it’s a paid solution, its ROI comes from reduced downtime and faster troubleshooting. More info at SolarWinds.com.

6. PRTG Network Monitor

Developed by Paessler, PRTG is an all-in-one system monitor that focuses heavily on network performance. It uses sensors to monitor bandwidth, uptime, and device health across wired and wireless networks.

- Over 200 sensor types available

- Auto-discovery of network devices

- Mobile app for remote monitoring

PRTG is user-friendly and scales well from small businesses to large corporations. Check it out at Paessler.com.

7. New Relic One

New Relic One goes beyond basic system monitoring by offering full-stack observability. It tracks infrastructure, applications, browser performance, and even mobile app behavior.

- AI-driven insights with anomaly detection

- Real User Monitoring (RUM) for frontend performance

- OpenTelemetry support for modern telemetry standards

New Relic is ideal for organizations embracing digital transformation. Learn more at Newrelic.com.

Key Metrics Tracked by a System Monitor

To truly understand system health, a system monitor must track a wide range of performance indicators. These metrics provide early warnings of potential failures and help optimize resource allocation.

CPU Usage and Load Average

CPU usage indicates how much processing power is being consumed at any given moment. A sustained high CPU usage (above 80%) can signal performance degradation or inefficient code.

- Load average is the average number of processes running or waiting for CPU time (on Linux, also those blocked on uninterruptible I/O), reported over the last 1, 5, and 15 minutes

- High load with low CPU may indicate I/O bottlenecks

- Tools like top, htop, and vmstat provide real-time CPU insights

For example, if a web server’s CPU spikes during peak traffic, a system monitor can trigger auto-scaling in cloud environments to maintain performance.
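
Load average only means something relative to the number of cores, so a common first step is to normalize it. The sketch below does that and maps the result to a status; the 0.7 and 1.0 ratios are illustrative assumptions, not standard values, and `os.getloadavg()` is available on Unix-like systems only.

```python
import os


def load_per_core(load_average, cpu_count):
    """Normalize a load average by the number of CPU cores."""
    if cpu_count < 1:
        raise ValueError("cpu_count must be at least 1")
    return load_average / cpu_count


def classify_load(load_average, cpu_count, warn_ratio=0.7, crit_ratio=1.0):
    """Map a load average to a status; both ratios are assumed cut-offs."""
    ratio = load_per_core(load_average, cpu_count)
    if ratio >= crit_ratio:
        return "critical"
    if ratio >= warn_ratio:
        return "warning"
    return "ok"


def current_load_status():
    """Classify the live 1, 5, and 15 minute load averages (Unix-like only)."""
    cores = os.cpu_count() or 1
    return [classify_load(value, cores) for value in os.getloadavg()]
```

A load of 3.0 is a warning on a 4-core machine under these assumptions, but perfectly healthy on a 16-core one.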

Memory Utilization

Memory (RAM) is critical for application responsiveness. A system monitor tracks both total memory usage and swap activity.

- High memory usage can lead to swapping, which slows down the system

- Memory leaks in applications can be detected over time

- Free vs. cached memory should be analyzed for accurate assessment

Linux systems often show high memory usage due to caching, but this isn’t necessarily a problem. A good system monitor distinguishes between active and cached memory.
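
As a rough illustration of the cached-versus-used distinction, the following sketch parses `/proc/meminfo`-style text and computes memory pressure from `MemAvailable`, the kernel's estimate of memory that can be handed to applications without swapping. The function names are hypothetical, and the approach assumes a Linux kernel recent enough to report `MemAvailable`.

```python
def parse_meminfo(text):
    """Parse /proc/meminfo-style text into a dict of integer values (mostly kB)."""
    values = {}
    for line in text.splitlines():
        key, _, rest = line.partition(":")
        fields = rest.split()
        if fields:
            values[key.strip()] = int(fields[0])
    return values


def memory_pressure_percent(meminfo):
    """Percent of RAM not readily available; reclaimable cache is not counted as used."""
    total = meminfo["MemTotal"]
    available = meminfo["MemAvailable"]
    return 100.0 * (total - available) / total


def naive_used_percent(meminfo):
    """Percent of RAM that is simply not free; this overstates usage on Linux."""
    total = meminfo["MemTotal"]
    return 100.0 * (total - meminfo["MemFree"]) / total


def read_meminfo(path="/proc/meminfo"):
    """Read and parse the live file (Linux only)."""
    with open(path, encoding="ascii") as handle:
        return parse_meminfo(handle.read())
```

On a host with plenty of page cache, `naive_used_percent` can sit above 90% while `memory_pressure_percent` stays low, which is why alerting on the former tends to produce false alarms.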

Disk I/O and Latency

Disk performance is often the hidden bottleneck in server environments. A system monitor tracks read/write speeds, queue depth, and latency.

- High disk latency (>20ms) can degrade database performance

- Monitoring tools can alert on disk full conditions before they cause outages

- SSD vs. HDD performance differences should be accounted for

For instance, MySQL databases are highly sensitive to disk I/O. A sudden increase in write latency could indicate failing hardware or poorly optimized queries.
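
Average I/O latency can be estimated from two snapshots of the kernel's cumulative disk counters: divide the extra milliseconds spent on I/O by the extra operations completed. The sketch below assumes the Linux `/proc/diskstats` field layout; `DiskSample` and the function names are hypothetical.

```python
from collections import namedtuple

# Cumulative counters for one device, as found in /proc/diskstats.
DiskSample = namedtuple("DiskSample", "reads read_ms writes write_ms")


def parse_diskstats_line(line):
    """Extract the device name and the four counters used for latency."""
    fields = line.split()
    sample = DiskSample(
        reads=int(fields[3]),      # reads completed
        read_ms=int(fields[6]),    # milliseconds spent reading
        writes=int(fields[7]),     # writes completed
        write_ms=int(fields[10]),  # milliseconds spent writing
    )
    return fields[2], sample


def average_latency_ms(before, after):
    """Average milliseconds per completed I/O between two snapshots."""
    ios = (after.reads - before.reads) + (after.writes - before.writes)
    if ios <= 0:
        return 0.0  # idle device: no operations to average over
    busy_ms = (after.read_ms - before.read_ms) + (after.write_ms - before.write_ms)
    return busy_ms / ios
```

Taking a snapshot every few seconds and comparing the result against the 20 ms guideline above gives a simple latency alert without any external tooling.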

How a System Monitor Enhances Security

While primarily seen as a performance tool, a system monitor plays a vital role in cybersecurity. Unusual system behavior often precedes security breaches.

Detecting Unauthorized Access

A sudden spike in CPU or network usage from an unknown process might indicate a crypto-mining malware infection. Similarly, unexpected login attempts or privilege escalations can be flagged by monitoring tools.

- Monitor /var/log/auth.log (Debian/Ubuntu), /var/log/secure (RHEL-based distributions), or the Security Event Log (Windows) for anomalies

- Set up alerts for failed login attempts or root access

- Correlate events across multiple systems for pattern detection

Tools like OSSEC integrate system monitoring with intrusion detection, providing real-time security alerts.
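
A failed-login alert can start as something very small. This sketch counts OpenSSH "Failed password" lines per source address and reports the addresses that cross a limit. The regular expression assumes the usual sshd log wording, and the threshold of 5 is an arbitrary example; dedicated tools handle far more message variants.

```python
import re
from collections import Counter

# Matches lines such as:
#   "... sshd[123]: Failed password for invalid user admin from 203.0.113.5 port 50000 ssh2"
FAILED_LOGIN = re.compile(r"Failed password for (?:invalid user )?(\S+) from (\S+) port \d+")


def failed_logins_by_source(log_lines):
    """Count failed password attempts per source address."""
    counts = Counter()
    for line in log_lines:
        match = FAILED_LOGIN.search(line)
        if match:
            counts[match.group(2)] += 1
    return counts


def suspicious_sources(log_lines, max_failures=5):
    """Return the sorted addresses with at least max_failures failed attempts."""
    counts = failed_logins_by_source(log_lines)
    return sorted(ip for ip, n in counts.items() if n >= max_failures)
```

Feeding it the lines of the auth log, for example `suspicious_sources(open("/var/log/auth.log"))` on a Debian-style system, yields a list that could drive an alert or a firewall rule.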

Monitoring File Integrity

Critical system files (e.g., /etc/passwd, boot loaders) should remain unchanged unless intentionally modified. A system monitor can use checksums (like SHA-256) to detect unauthorized changes.

- Tools like AIDE (Advanced Intrusion Detection Environment) perform file integrity checks

- Regular scans can catch rootkits or backdoors

- Integrate with SIEM systems for centralized logging
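
The checksum idea behind file integrity monitoring fits in a few lines. The sketch below records SHA-256 digests for a set of files and later reports which ones were modified or removed. It is a simplified stand-in for what tools like AIDE do, not a description of their internals, and the function names are hypothetical.

```python
import hashlib
from pathlib import Path


def sha256_of(path, chunk_size=65536):
    """Hash a file in chunks so large files do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_baseline(paths):
    """Record the current digest of every path."""
    return {str(p): sha256_of(p) for p in paths}


def find_changes(baseline):
    """Compare files on disk with the baseline and report differences."""
    changes = {}
    for path, expected in baseline.items():
        if not Path(path).exists():
            changes[path] = "missing"
        elif sha256_of(path) != expected:
            changes[path] = "modified"
    return changes
```

In practice the baseline itself must be stored somewhere an attacker cannot rewrite it, such as read-only media or a separate host; otherwise the check proves little.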

“The best defense is visibility. If you can’t see it, you can’t secure it.” — CISO, Fortune 500 Company

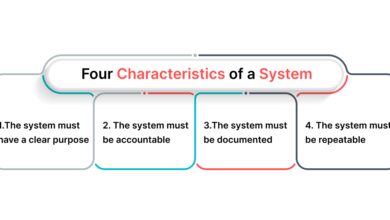

Best Practices for Implementing a System Monitor

Deploying a system monitor isn’t just about installing software—it’s about building a culture of proactive IT management.

Define Clear Monitoring Objectives

Before deploying any tool, organizations must define what they want to monitor and why. Is the goal to reduce downtime? Improve application performance? Meet compliance requirements?

- Identify critical systems and services (e.g., database servers, firewalls)

- Prioritize monitoring based on business impact

- Set SLAs (Service Level Agreements) for uptime and response times

For example, an e-commerce site might prioritize monitoring its payment gateway and inventory database over internal HR systems.

Use Thresholds and Smart Alerts

One of the biggest pitfalls in system monitoring is alert fatigue—receiving too many notifications, most of which are false positives.

- Set dynamic thresholds based on historical data (e.g., 95th percentile)

- Use hysteresis to prevent alert flapping

- Leverage machine learning for anomaly detection (e.g., Datadog, New Relic)

Instead of alerting on “CPU > 80%”, consider alerting only if it stays above 80% for more than 5 minutes and affects user response time.
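
Here is one way the "sustained breach plus hysteresis" rule could look in code. The alert fires only after the value has stayed above the trigger for the hold period, and clears only once it drops below a lower threshold, which prevents flapping around 80%. The 70% clear level is an assumption, and the sketch leaves out the user response time condition for brevity.

```python
class SustainedThresholdAlert:
    """Fire after a sustained breach; clear only below a lower threshold."""

    def __init__(self, trigger=80.0, clear=70.0, hold_seconds=300):
        self.trigger = trigger
        self.clear = clear
        self.hold_seconds = hold_seconds
        self.firing = False
        self._breach_started = None

    def update(self, timestamp, value):
        """Feed one sample (timestamp in seconds) and return the alert state."""
        if self.firing:
            # Hysteresis: stay firing until the value falls below `clear`.
            if value < self.clear:
                self.firing = False
                self._breach_started = None
        elif value > self.trigger:
            if self._breach_started is None:
                self._breach_started = timestamp
            if timestamp - self._breach_started >= self.hold_seconds:
                self.firing = True
        else:
            # Any dip back to the trigger level or below resets the hold timer.
            self._breach_started = None
        return self.firing
```

A short spike to 95% never pages anyone under this rule, while a steady climb that holds for five minutes does.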

Integrate with Incident Management

A system monitor should not exist in isolation. It must integrate with ticketing systems (like Jira), communication tools (like Slack), and ITSM platforms.

- Automatically create incidents when critical thresholds are breached

- Escalate alerts based on severity and time of day

- Link monitoring data to post-mortem reports for continuous improvement

This integration ensures that alerts lead to action, not just noise.

The Role of AI and Machine Learning in System Monitoring

The future of system monitoring is intelligent, predictive, and self-healing. Artificial intelligence is transforming how we interpret system data.

Anomaly Detection Using AI

Traditional threshold-based alerts are static and often miss subtle issues. AI-powered system monitors learn normal behavior patterns and flag deviations.

- Google’s SRE team uses AI to predict disk failures before they occur

- Datadog’s Anomaly Detection uses statistical models to identify unusual metric behavior

- Netflix’s Atlas telemetry platform stores dimensional time-series data for near real-time operational insight into service health

These systems reduce false positives and help detect issues that humans might overlook.
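
Commercial anomaly detection is considerably more sophisticated, but the core idea of learning a baseline and flagging deviations can be shown with a rolling z-score. This is a deliberately simple statistical stand-in, not the algorithm any of the products above use; the window size and the threshold of 3 standard deviations are assumptions.

```python
from collections import deque
from statistics import mean, stdev


class RollingAnomalyDetector:
    """Flag values that deviate strongly from a rolling window of recent samples."""

    def __init__(self, window=30, z_threshold=3.0, min_samples=5):
        self.history = deque(maxlen=window)
        self.z_threshold = z_threshold
        self.min_samples = min_samples

    def observe(self, value):
        """Return True when value is an outlier relative to the recent window."""
        anomalous = False
        if len(self.history) >= self.min_samples:
            baseline = mean(self.history)
            spread = stdev(self.history)
            if spread > 0:
                anomalous = abs(value - baseline) / spread > self.z_threshold
        # Outliers are kept out of the window so they do not skew the baseline.
        if not anomalous:
            self.history.append(value)
        return anomalous
```

Keeping outliers out of the window makes spikes stand out clearly, at the cost of adapting slowly when the normal level genuinely shifts; production systems usually add seasonality handling and a way to re-learn the baseline.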

Predictive Maintenance and Auto-Remediation

Advanced system monitors can now predict when a component will fail and even trigger automated fixes.

- Predict disk failure based on SMART data and usage patterns

- Auto-scale cloud resources before traffic spikes

- Restart hung services or clear cache automatically

For example, AWS CloudWatch can trigger Lambda functions to restart EC2 instances if they become unresponsive, minimizing downtime without human intervention.
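
The same restart-on-failure pattern can be sketched independently of any cloud provider. In the example below, `is_healthy` and `restart` are hypothetical callables supplied by the caller (for instance an HTTP health check and a service restart command), and the limit of three consecutive failures is an arbitrary choice.

```python
class ServiceWatchdog:
    """Restart a service after several consecutive failed health checks."""

    def __init__(self, is_healthy, restart, max_failures=3):
        self.is_healthy = is_healthy    # callable returning True or False
        self.restart = restart          # callable performing the remediation
        self.max_failures = max_failures
        self.failures = 0

    def tick(self):
        """Run one health check and return the resulting state."""
        if self.is_healthy():
            self.failures = 0
            return "healthy"
        self.failures += 1
        if self.failures >= self.max_failures:
            self.restart()
            self.failures = 0
            return "restarted"
        return "degraded"
```

Requiring several consecutive failures keeps a single slow response from triggering a restart. A real deployment would also want a cap on restarts per hour so that a crash loop escalates to a human instead of repeating forever.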

Challenges and Limitations of System Monitoring

Despite its benefits, system monitoring comes with challenges that organizations must address.

Data Overload and Noise

Large deployments can generate terabytes of monitoring data daily. Without proper filtering and aggregation, this volume quickly becomes overwhelming.

- Too many metrics can obscure real issues

- Poorly configured alerts lead to alert fatigue

- Storage costs for long-term retention can be high

Solution: Implement data sampling, use roll-up metrics, and apply intelligent filtering.
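
Roll-up metrics are the easiest of these to picture: raw samples are collapsed into fixed time windows that keep only a few summary values. The sketch below shows one possible shape for that aggregation; the 60-second window and the output field names are assumptions.

```python
from collections import defaultdict


def roll_up(samples, window_seconds=60):
    """Aggregate (timestamp, value) samples into fixed windows of min/max/avg."""
    buckets = defaultdict(list)
    for timestamp, value in samples:
        # Align each sample to the start of its window.
        window_start = int(timestamp // window_seconds) * window_seconds
        buckets[window_start].append(value)
    return [
        {
            "start": start,
            "min": min(values),
            "max": max(values),
            "avg": sum(values) / len(values),
            "count": len(values),
        }
        for start, values in sorted(buckets.items())
    ]
```

Keeping the minimum and maximum alongside the average matters: an average alone hides exactly the short spikes that long-term data is often consulted to find.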

Complexity in Distributed Systems

In microservices and serverless architectures, tracking performance across dozens or hundreds of services is extremely complex.

- Distributed tracing is required to follow requests across services

- Correlation of logs, metrics, and traces (the “three pillars of observability”) is essential

- Tools like Jaeger and OpenTelemetry help standardize this process

Without proper tooling, debugging a failed transaction in a microservices environment can take hours.
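
Distributed tracing works because every service forwards a shared trace identifier with each outgoing request. The sketch below builds and propagates a header in the W3C Trace Context `traceparent` format (version, trace ID, span ID, flags). It is only meant to show the mechanism; a real service would rely on an OpenTelemetry SDK rather than hand-rolled helpers like these.

```python
import re
import secrets

# version "00", 32 hex chars of trace ID, 16 hex chars of span ID, 2 hex chars of flags
TRACEPARENT = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def new_traceparent(sampled=True):
    """Start a new trace at the edge of the system."""
    trace_id = secrets.token_hex(16)
    span_id = secrets.token_hex(8)
    flags = "01" if sampled else "00"
    return f"00-{trace_id}-{span_id}-{flags}"


def child_traceparent(incoming):
    """Keep the caller's trace ID but mint a new span ID for the outgoing call."""
    match = TRACEPARENT.match(incoming or "")
    if match is None:
        # Missing or malformed header: start a fresh trace instead of failing.
        return new_traceparent()
    trace_id, _parent_span, flags = match.groups()
    return f"00-{trace_id}-{secrets.token_hex(8)}-{flags}"
```

Because the trace ID survives every hop, a tracing backend such as Jaeger can stitch the spans from each service back into a single request timeline.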

Privacy and Compliance Risks

Monitoring tools often collect sensitive data, including user behavior, IP addresses, and system configurations.

- Must comply with GDPR, HIPAA, CCPA, and other regulations

- Encryption of monitoring data in transit and at rest is critical

- Access controls must be enforced to prevent unauthorized viewing

Organizations must balance visibility with privacy to avoid legal and reputational risks.

What is the best free system monitor for beginners?

Zabbix and Nagios Core are excellent free options for beginners. Both offer robust features, large communities, and extensive documentation. Zabbix is often considered easier to set up, while Nagios provides more flexibility through plugins.

Can a system monitor prevent server crashes?

While a system monitor cannot prevent hardware failures, it can help prevent crashes caused by resource exhaustion, software bugs, or configuration errors by providing early warnings and enabling proactive intervention.

How often should monitoring data be collected?

Collection frequency depends on the metric. Critical metrics like CPU and memory should be collected every 10-30 seconds. Less critical data can be collected every few minutes. Over-collecting can strain systems, while under-collecting may miss short-lived issues.

Is system monitoring necessary for small businesses?

Yes. Even small businesses rely on IT systems for email, websites, and customer data. A simple system monitor can prevent costly downtime and data loss, making it a smart investment.

What’s the difference between monitoring and observability?

Monitoring checks whether systems are working (e.g., uptime, CPU). Observability goes deeper, allowing you to understand *why* a system behaves a certain way by analyzing logs, metrics, and traces. Observability is about being able to ask new questions, not just watching predefined metrics.

Choosing the right system monitor is not just a technical decision—it’s a strategic one. From preventing downtime to enhancing security and enabling AI-driven insights, effective monitoring is the backbone of modern IT operations. Whether you’re a small startup or a global enterprise, investing in a robust system monitor pays dividends in reliability, performance, and peace of mind.
